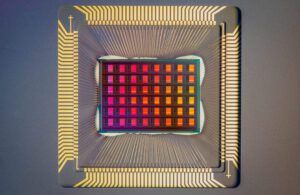

The NeuRRAM chip [Photo by David Baillot for the University of California San Diego]

Medical devices could one day get a boost from a new energy-efficient semiconductor designed with AI computing in mind.

Stanford engineers have developed a resistive random-access memory (RRAM) chip called NeuRRAM that performs AI processing inside the chip's memory itself, saving the battery power normally spent shuttling data back and forth between a separate processor and memory.
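For readers curious about the mechanism, the core idea behind RRAM compute-in-memory can be sketched in a few lines of Python. This is an idealized illustration built on our own assumptions, not the NeuRRAM circuit: each memory cell's conductance stores one neural-network weight, inputs are applied as voltages on the rows, and each column wire sums the resulting cell currents. That means the multiply-and-add at the heart of AI inference happens where the weights already sit, so the weights never have to travel to a processor. The function name `crossbar_mvm` is hypothetical.

```python
# Idealized RRAM crossbar: a minimal sketch, not NeuRRAM's actual design.
# Assumptions: conductances[i][j] (siemens) stores one weight, voltages[i]
# (volts) is the input on row i, and there is no noise or wire resistance.

def crossbar_mvm(conductances, voltages):
    """Return column currents I[j] = sum over i of voltages[i] * conductances[i][j]."""
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one input voltage per row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law gives the current through cell (i, j); Kirchhoff's
            # current law adds it onto the shared column wire j.
            currents[j] += v * conductances[i][j]
    return currents


# Two inputs, two outputs: the weights stay in place as conductances.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
print(crossbar_mvm(G, V))  # approximately 0.7 and 1.0 microamps
```

In a real chip, those column currents would then be digitized, and device variation, noise and converter precision all limit accuracy; the sketch ignores those effects entirely.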

“The data movement issue is similar to spending eight hours in commute for a two-hour workday,” Weier Wan, a recent Stanford graduate who led the project, said in a news release. “With our chip, we are showing a technology to tackle this challenge.”

The researchers say their compute-in-memory (CIM) chip is about the size of a fingertip and can do more work on a limited battery budget than current chips. That combination of small size and energy efficiency makes the chip a potential fit for space-constrained medical devices, such as home monitors, as they increasingly gain AI capabilities and cloud connectivity.

“Having those calculations done on the chip instead of sending information to and from the cloud could enable faster, more secure, cheaper and more scalable AI going into the future, and give more people access to AI power,” Stanford School of Engineering Professor H.-S. Philip Wong said in the release.

Wong and his co-authors recently published their work in Nature. The Stanford researchers designed the NeuRRAM chip in collaboration with the lab of Gert Cauwenberghs at the University of California, San Diego.

Compute-in-memory is not a new concept, but the researchers say their chip demonstrates AI applications running on actual hardware rather than only in simulation.