[Image from Pixabay]

Whether it’s OpenAI’s ChatGPT, Microsoft’s new Bing or Google’s Bard, 2023 is the year when generative artificial intelligence entered the popular consciousness.

In the medtech space, it seems as though every company is seeking ways to incorporate some form of AI into the digital features of its products and services.

So what is artificial intelligence good at so far when it comes to advancing medtech and healthcare in general? Here are seven recent examples:

1. Helping physicians identify medical problems quickly

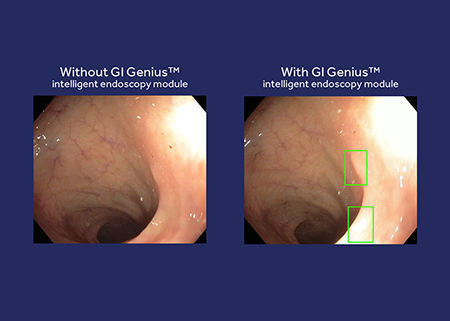

GI Genius’ AI-based enhancements place green boxes around areas that may need extra scrutiny during a colonoscopy, helping to prevent physicians from losing their focus. [Image courtesy of Medtronic]

Interest is growing in artificial intelligence that can help radiologists, gastroenterologists and others better spot potential diseases in the countless images they examine each day.

For example, GI Genius is an AI-assisted colonoscopy tool designed to help detect polyps that may lead to colorectal cancer. Medtronic is the exclusive worldwide distributor of the technology, which was developed and manufactured by Cosmo Pharmaceuticals. Giovanni Di Napoli, president of the Gastrointestinal business at Medtronic, showed a GI Genius video at our DeviceTalks West event in Santa Clara, California, this past October. He described how lesion detection during colonoscopies declines from morning through afternoon. GI Genius’ AI-based enhancements change the game by placing green boxes around areas that may need extra scrutiny during the procedure, helping to keep physicians from losing focus.

GI Genius could be set to get even better. Medtronic has partnered with Nvidia to enhance the tool’s AI capabilities, allowing third-party developers to train and validate their own AI models on the GI Genius AI Access platform. The technology has the potential to improve patient outcomes, reduce variability in care and transform the way healthcare approaches the early detection of colorectal cancer.

In other recent news, Viz.ai has secured FDA clearance for its artificial intelligence-powered algorithm designed to detect suspected abdominal aortic aneurysms (AAAs) and triage their care. The Viz AAA system automatically analyzes computed tomography angiography (CTA) scans for suspected AAAs, then alerts clinicians and displays patient scans on their mobile devices so specialists can communicate in real time. Jayme Strauss, chief clinical officer at Viz.ai, thinks Viz AAA will enable care teams to prevent catastrophic aortic emergencies, such as aortic rupture, by increasing surveillance. Viz.ai plans to continue expanding its healthcare offerings.

2. Enabling better and more discreet health monitoring

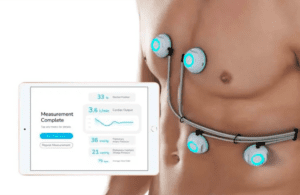

Sensydia’s AI-powered Cardiac Performance System (CPS) uses ultra-sensitive biosensors for the rapid, non-invasive measurement of a number of cardiac metrics. [Image courtesy of Sensydia]

It is hard these days to find a digital health or health monitoring company that is not talking about incorporating artificial intelligence into its offerings. AI has the potential to enable more discreet sensor arrays, letting doctors and patients gain insights from monitoring with minimal hassle. For example, Sensydia has completed enrollment in its 225-subject development study for its AI-powered Cardiac Performance System (CPS). The Los Angeles–based company designed the non-invasive CPS to use AI-based heart sound analysis, which may enable earlier detection and improved therapy guidance for patients with heart failure and pulmonary hypertension. The CPS uses ultra-sensitive biosensors to rapidly and non-invasively measure a number of cardiac metrics, such as ejection fraction, cardiac output, pulmonary artery pressure, and pulmonary capillary wedge pressure. According to the company, obtaining these measurements normally requires echocardiography and invasive catheterization.

Meanwhile, Boston-based Biofourmis has AI-driven software that collects and analyzes patient data in real time to identify changes that call for proactive intervention. The company has partnered with Japanese pharma giant Chugai to objectively measure pain in women with endometriosis, using a biosensor and an AI-based algorithm that draws on Biofourmis’ Biovitals.

3. AI-enabled prosthetics

The Myo Plus system [Image courtesy of Ottobock]

Advanced AI algorithms hold promise for helping people use myoelectric control, which relies on subtle electrical signals from their own muscles, to operate robotic prosthetics. For example, Ottobock offers its Myo Plus pattern recognition system for transradial (below-elbow) prosthesis users. Myo Plus is designed so the system adapts to the user’s natural movements, rather than requiring the user to adapt to the system.

4. Better visualization during surgery

Activ Surgical announced in January that it had completed the first AI-enabled surgery using its ActivSight Intelligent Light product. Designed to provide enhanced visualization and real-time, on-demand surgical insights in the operating room, the ActivSight module attaches to laparoscopic and robotic systems and integrates with standard monitors. The first AI-enabled ActivSight surgery took place on December 22, 2022, at the Ohio State University Wexner Medical Center, where Dr. Matthew Kalady performed a laparoscopic left colectomy using the colorectal AI mode within ActivSight. The company says its goal is for every surgical imaging system to deliver intelligent information that helps reduce surgical complication rates.

The ActivSight system [Image courtesy of Activ Surgical]

“While using one of ActivSight’s intraoperative visual overlays, the dye-free ActivPerfusion Mode, I was able to clearly see key critical structures in the surgical site and tissue perfusion in real-time,” Kalady said in a news release. “With the press of a button, the colorectal AI mode was enabled, removing distractions of background signals from non-bowel tissue and clearly focusing on perfusion to the colon. There was a clear difference in visualization during AI mode.”

5. Improved data collection from medical settings

Proprio designed its Paradigm system to help surgeons better visualize spinal surgeries. [Photo courtesy of Proprio]

Artificial intelligence could also help with surgical data collection. A case in point is Proprio, which is rolling its AI-powered surgical navigation system into operating rooms to collect data that could ultimately help surgeons improve how they perform procedures. The Seattle-based startup said it has placed its Paradigm system in several U.S. operating rooms to capture surgical data that will be useful for accelerating the system’s development.

6. Making better sense of patient data

GE HealthCare last year unveiled its Edison Digital Health Platform, an AI-powered, vendor-agnostic hosting and data aggregation platform. The medtech giant said the platform aims to make it easy to deploy clinical, workflow, analytics and AI tools, connecting devices and other data sources into an aggregated clinical data layer. Edison’s open, published interfaces support integrating applications from healthcare providers and third-party developers into existing workflows. GE HealthCare also planned to integrate and deploy its own apps on the platform. A company official said the goal was for clinicians to have actionable insights at their fingertips to help them better serve their patients.

7. The ability to crack tough scientific problems — and enable countless innovations

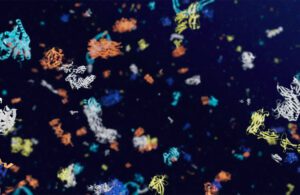

DeepMind’s AlphaFold artificial intelligence predicted the structure of more than 200 million proteins. [Image courtesy of DeepMind]

DeepMind, which shares a parent company (Alphabet) with Google, developed AlphaFold, an artificial intelligence system that cracked an extremely difficult scientific problem: predicting a protein’s 3D structure from its amino acid sequence. AlphaFold predicted the structures of more than 200 million proteins, covering nearly every cataloged protein known to science. DeepMind and the European Bioinformatics Institute expanded the AlphaFold Protein Structure Database by more than 200-fold, to over 200 million structures. As our fellow WTWH Media site Drug Discovery & Development reported last year, AlphaFold makes it possible to search for the 3D structure of a protein almost as easily as doing a Google keyword search. DeepMind has also made the data freely available to anyone for any purpose. Applications of the AI system include accelerating the discovery of drugs for neglected diseases, combating antibiotic resistance, studying the nuclear pore complex, developing a novel malaria vaccine, shedding light on genetic variation, gauging the impact of rotavirus on gastroenteritis, and contributing to drug development for cancer and neurological disease.